
These robots orient themselves using the silhouettes of their neighbors, which can grow, shrink, or move across their visual field. The study shows that the robots accurately reproduce collective movements observed in animals.

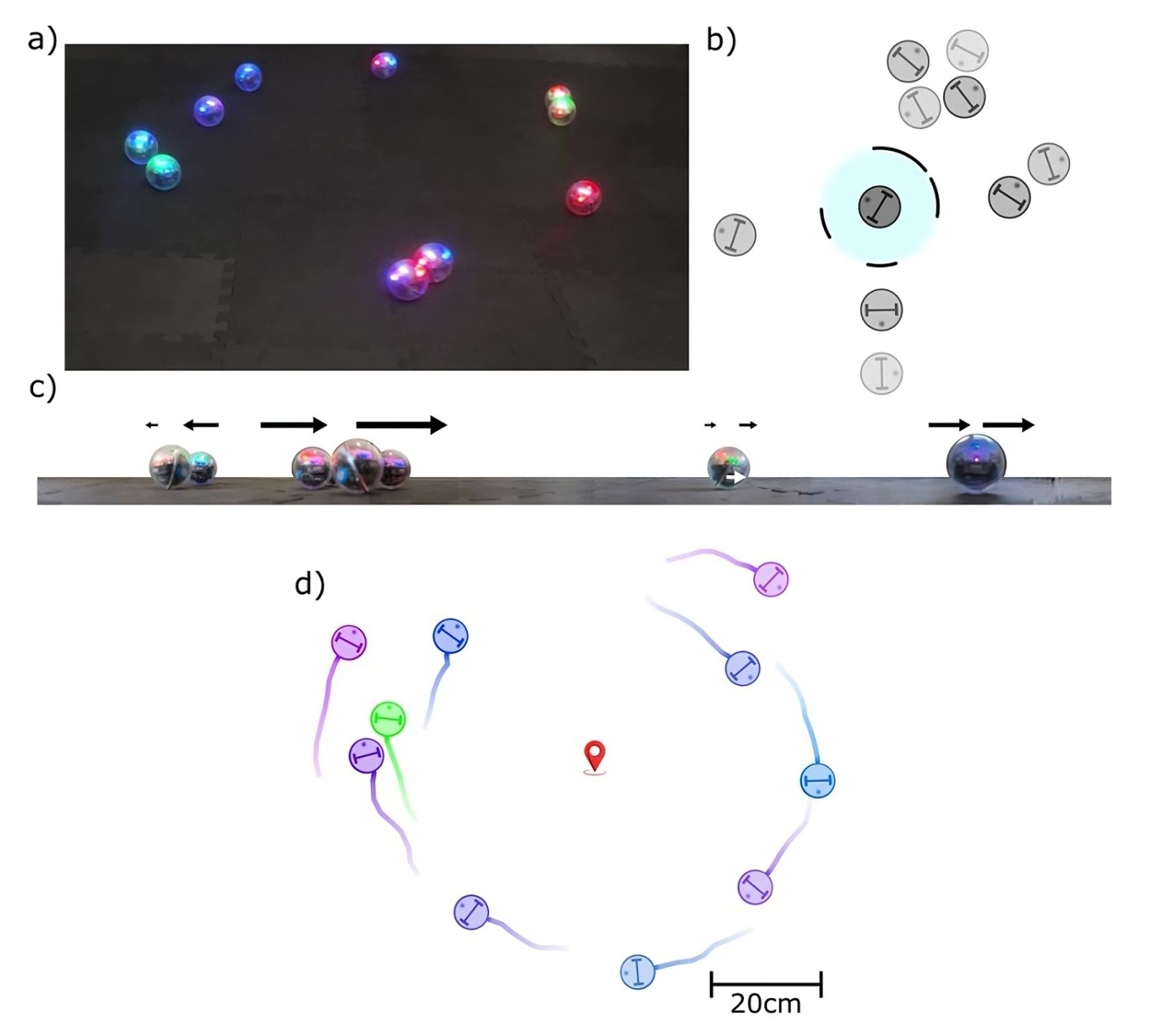

a) The swirling collective movement is reproduced by the spherical robots.

b) The primary vision of neighboring silhouettes is emulated on each robot.

c) The perceived silhouettes can grow, shrink, or scroll across the visual field.

d) Adding an anchor allows the robotic collective movement to be contained within a limited space.

© Castro, Eloy, Ruffier (Creative Commons Attribution 4.0)

Drawing inspiration from the collective movements of animals...

Imagine a flock of birds crossing the sky or a school of fish swimming in perfect harmony. These fascinating movements, long studied by scientists, can now be replicated in simulations and with robots using only visual information.

These movements are called collective behaviors. Traditionally, they have been modeled without taking the visual system of individuals into account. As a result, most models assume that each individual knows the distance to its neighbors, information that animals do not have direct access to.

...to create a new model...

In an article published in the journal Bioinspiration & Biomimetics, scientists developed a new model. Instead of relying on artificial distance measurements, it uses primary visual cues, as animals do, to apply a set of four rules that every group member follows independently.

This model assumes that each individual sees only the silhouettes formed by the others, without recognizing the individuals themselves. Each neighbor is therefore represented solely by its silhouette in the observer's visual field, and the primary visual cues arise as these silhouettes grow, shrink, or scroll across that field.
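To make the idea of a silhouette concrete, here is a minimal Python sketch, assuming a 2-D plane, spherical individuals of known radius, and a 1-D visual field split into equal angular bins. The function names `silhouette` and `visual_field` and the default of 360 bins are hypothetical illustrations, not the authors' implementation. A sphere of radius R seen from distance d subtends an optical size of 2·asin(R/d), so the observer gets a bearing and an angular size but never the distance itself: a sphere of radius 1 at distance 2 and a sphere of radius 2 at distance 4 both look 60 degrees wide.

```python
import math


def silhouette(observer_xy, heading, neighbor_xy, radius):
    """Return (bearing, optical_size) of a spherical neighbor, in radians.

    bearing: direction of the neighbor's center relative to the observer's
             heading, wrapped to [-pi, pi); positive means to the left.
    optical_size: full angle subtended by the sphere, 2 * asin(radius / d).
    """
    dx = neighbor_xy[0] - observer_xy[0]
    dy = neighbor_xy[1] - observer_xy[1]
    d = math.hypot(dx, dy)
    if d <= radius:
        raise ValueError("observer is inside the neighbor's body")
    bearing = (math.atan2(dy, dx) - heading + math.pi) % (2 * math.pi) - math.pi
    optical_size = 2 * math.asin(radius / d)
    return bearing, optical_size


def visual_field(observer_xy, heading, neighbors, radius, n_bins=360):
    """Binary 1-D visual field: bin k is True if any silhouette covers it.

    Bins span [-pi, pi) relative to the heading; the observer keeps no
    record of which neighbor produced which covered bin.
    """
    field = [False] * n_bins
    bin_width = 2 * math.pi / n_bins
    for nb in neighbors:
        bearing, size = silhouette(observer_xy, heading, nb, radius)
        for k in range(n_bins):
            center = -math.pi + (k + 0.5) * bin_width
            # Angular offset between the bin center and the silhouette center.
            delta = (center - bearing + math.pi) % (2 * math.pi) - math.pi
            if abs(delta) <= size / 2:
                field[k] = True
    return field
```

For example, `silhouette((0, 0), 0.0, (2, 0), 1.0)` gives a bearing of 0 and an optical size of about 1.047 rad (60 degrees), and the corresponding `visual_field` has 60 of its 360 bins set.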

The four rules are as follows:

- Attraction: This rule represents the group's natural tendency to stay together. Without attraction, individuals would drift apart. It is implemented using the optical size of each silhouette, that is, the angle the silhouette occupies in the visual field.

- Alignment: This rule reflects the group's tendency to move in the same direction. Without alignment, individuals would struggle to follow the group. It relies on what is called "optical flow," the apparent scrolling of silhouettes across the individual's visual field.

- Avoidance: This rule, introduced to prevent collisions between robots, reflects each individual's tendency to keep away from nearby robots. It is implemented by adjusting the attraction according to a threshold on the optical size of each silhouette.

- Anchoring: This rule was added because the space available for robotic experiments is always limited. It reflects the confinement of groups to a specific location. It works like the attraction rule but applies to a virtually defined reference location.
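The four rules can be combined into a single turning command. The Python sketch below shows one hypothetical way to do it, not the equations from the article: each silhouette is summarized by its bearing, its optical size, and the rate at which its bearing changes (a crude stand-in for optical flow), and the gains `K_ATT`, `K_ALI`, `K_ANC` and the `SIZE_THRESHOLD` value are invented for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Silhouette:
    bearing: float       # rad, relative to own heading; positive = to the left
    size: float          # optical size, rad
    bearing_rate: float  # rad/s, apparent scrolling (hypothetical optical-flow proxy)


# Hypothetical gains and threshold, chosen for illustration only.
K_ATT = 1.0
K_ALI = 0.5
K_ANC = 0.3
SIZE_THRESHOLD = math.radians(40)  # silhouettes wider than this count as too close


def turn_rate(silhouettes, anchor_bearing):
    """Combine attraction, avoidance, alignment and anchoring into one
    turning command in rad/s (positive = turn left)."""
    # Anchoring: steer toward a virtual point, like attraction toward a neighbor.
    anc = math.sin(anchor_bearing)
    if not silhouettes:
        return K_ANC * anc
    att = 0.0
    ali = 0.0
    for s in silhouettes:
        # Attraction with avoidance folded in: small (distant-looking)
        # silhouettes pull, silhouettes larger than the threshold push.
        att += (SIZE_THRESHOLD - s.size) * math.sin(s.bearing)
        # Alignment: turn in the direction the silhouette scrolls,
        # which tends to cancel the apparent motion.
        ali += s.bearing_rate
    n = len(silhouettes)
    return K_ATT * att / n + K_ALI * ali / n + K_ANC * anc
```

Writing avoidance as the sign change of `SIZE_THRESHOLD - s.size` is one reading of "adjusting attraction based on a threshold": a neighbor directly to the left that looks 10 degrees wide produces a left turn, while the same neighbor looking 60 degrees wide produces a right turn.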

The robotic implementation introduces a delay between the command sent to a robot and the start of its movement. This delay was also built into the simulation so that the two remain comparable.
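Reproducing such a command delay in simulation can be as simple as a first-in, first-out buffer. A minimal Python sketch, assuming the delay is a whole number of simulation steps and that the robot receives an idle command of 0 before the first real command arrives (the class name `DelayedActuator` is hypothetical):

```python
from collections import deque


class DelayedActuator:
    """Apply each command only after a fixed number of simulation steps."""

    def __init__(self, delay_steps, idle_command=0.0):
        if delay_steps < 0:
            raise ValueError("delay_steps must be non-negative")
        # Pre-fill the buffer so the first `delay_steps` outputs are idle.
        self._queue = deque([idle_command] * delay_steps)

    def step(self, command):
        """Enqueue `command` and return the command that takes effect now."""
        self._queue.append(command)
        return self._queue.popleft()


act = DelayedActuator(2)
applied = [act.step(c) for c in [1.0, 2.0, 3.0, 4.0]]
print(applied)  # [0.0, 0.0, 1.0, 2.0]
```

With illustrative numbers, a 0.1 s delay at a 20 Hz simulation step corresponds to `DelayedActuator(2)`, since 0.1 × 20 = 2 steps.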

...advancing autonomous robotics.

This work applies a visual model of collective movement to spherical robots, each of which has emulated vision. A group of 10 independent spherical robots reproduces these collective behaviors. The model has also helped bridge the gap between simulation and robotic experiments. Robotic experimentation always introduces uncertainties that are difficult to model precisely, yet here the simulated and robotic collective behaviors, driven by exactly the same visual model, are nearly identical.

In conclusion, this minimal visual model of collective movement is sufficient to recreate most collective behaviors with spherical robots, whose behavior closely matches that of the numerical simulations. This work represents a significant advance for autonomous robotics, with potential applications in swarm robotics, search and rescue missions, and automated surveillance systems.